The EU AI Act puts an end to the previously unregulated use of AI – including tools like Microsoft Copilot or proprietary AI agents. Since August 2024, one thing has been clear: companies that use AI will need defined roles, documented processes, and traceable logging by 2026 at the latest.

What is the AI Act?

The EU AI Act is the world’s first comprehensive AI regulation and has been in force since August 2024.

The core of the AI Act is a risk-based approach: AI systems are classified into risk categories based on their area of application and potential impact – from “minimal” to “unacceptable”. The higher the risk, the stricter the requirements: from simple labeling to documentation and control obligations, up to outright prohibitions, for example of social scoring or manipulative systems.

This makes one thing clear: AI affects not only IT, but equally HR, business departments, data protection, and legal. Inventory lists, responsible roles, risk assessments, and traceable decisions are no longer optional, but mandatory.

When Do the Regulations Apply?

Companies are already using Copilot, ChatGPT, or proprietary AI agents today – mostly without an inventory, risk classification, or documented responsibilities. This is not yet a problem, but the transition period is ending: from August 2026, most obligations for high-risk AI systems will apply. Those who have not established governance structures by then risk fines and usage prohibitions.

Brief overview of the most important milestones:

| Timeline | What applies? |
|---|---|
| As of February 2025 | Prohibition of unacceptable-risk AI systems (e.g., social scoring, manipulative systems) and obligation to ensure basic AI competence (AI literacy). |
| As of August 2025 | Requirements for general-purpose AI models (e.g., GPT, Gemini) – transparency, documentation, model cards. |
| By August 2026 | Main deadline: Companies must have implemented AI inventory, risk classification, policies, roles (RACI), approval processes, logging, and monitoring – especially for high-risk applications. |
| As of August 2027 | Additional verification obligations, including for standardized high-risk systems such as medical devices, machines, elevators, etc. |
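The milestones in the table can also be kept as a small lookup, for example to show in an internal dashboard which obligations already apply on a given day. The following is a minimal sketch, not a legal reference: the labels are shortened from the table, the function name `obligations_in_force` is hypothetical, and the day of the month (the 2nd) is taken from the regulation's staged application dates and should be verified against the official text.

```python
from datetime import date

# Staged application dates; labels are shortened from the table above.
# Assumption: each milestone starts on the 2nd of the month.
MILESTONES = [
    (date(2025, 2, 2), "Prohibited systems and AI literacy"),
    (date(2025, 8, 2), "General-purpose AI model requirements"),
    (date(2026, 8, 2), "Main obligations, especially for high-risk applications"),
    (date(2027, 8, 2), "Additional obligations for standardized high-risk systems"),
]


def obligations_in_force(on: date) -> list[str]:
    """Return the labels of all milestones that already apply on the given date."""
    return [label for start, label in MILESTONES if start <= on]


if __name__ == "__main__":
    for label in obligations_in_force(date(2026, 1, 15)):
        print(label)
```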

The period until 08/2026 is not an observation phase, but an implementation phase. Now is the time to establish inventory lists, identify high-risk applications, define responsibilities, and test initial policies. Those who wait lose valuable months and will face hectic compliance projects in 2026.

Policies & Processes – What Must be Documented by 2026

For Copilot, GPT-based assistants, or proprietary AI agents to be operated in compliance with the law from 2026, it is not enough to approve individual use cases. Companies need a documented policy set and clear processes – lean, but binding. The goal is a uniform framework: Who decides on new AI applications, which data may be used, and how is their use documented traceably?

What should the policy set include at a minimum?

These policies only function with clear processes behind them:

Roles According to RACI – Who Bears Which Responsibility?

For AI systems like Copilot or proprietary agents to be operated responsibly, more than just technology is needed – clear responsibilities are essential. The AI Act does not require job titles, but traceable responsibility. A RACI matrix is perfectly suited for this: Who is Responsible, who is Accountable, who is Consulted, and who is Informed?

Typical roles in the AI governance model:

| Role | Tasks in AI Use |
|---|---|
| Owner / Business department | Reports use cases, documents purpose and benefit, is accountable for results. |
| IT / Platform operation | Technical setup (e.g., Copilot, Azure OpenAI), access controls, integration & operation. |
| Risk / Compliance | Assesses risk, defines control measures, documents risk acceptance. |
| Legal / Data protection | Reviews legal frameworks, licenses, data protection impact assessment. |
| Information security | Evaluates data access, model security, protection against misuse. |
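A RACI matrix is easy to keep in machine-readable form, which makes gaps visible early. The sketch below is illustrative only: the three activities and the letter assignments are hypothetical examples, not a recommended allocation, and `raci_gaps` is an assumed helper name. It checks the usual RACI convention that every activity has exactly one Accountable role and at least one Responsible role.

```python
# Hypothetical RACI assignments. R = Responsible, A = Accountable,
# C = Consulted, I = Informed. A role may hold several letters (e.g. "AR").
RACI = {
    "register use case": {
        "Owner": "AR", "IT": "C", "Risk/Compliance": "I",
    },
    "assess risk": {
        "Risk/Compliance": "AR", "Owner": "C",
        "Legal/Data protection": "C", "Information security": "C",
    },
    "operate platform": {
        "IT": "AR", "Information security": "C", "Owner": "I",
    },
}


def raci_gaps(matrix: dict[str, dict[str, str]]) -> list[str]:
    """List activities that lack a single Accountable or any Responsible role."""
    problems = []
    for activity, assignment in matrix.items():
        accountable = [r for r, letters in assignment.items() if "A" in letters]
        responsible = [r for r, letters in assignment.items() if "R" in letters]
        if len(accountable) != 1:
            problems.append(
                f"{activity}: expected exactly one Accountable, found {len(accountable)}"
            )
        if not responsible:
            problems.append(f"{activity}: no Responsible role assigned")
    return problems


if __name__ == "__main__":
    print(raci_gaps(RACI) or "RACI matrix is complete")
```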

Approval Process – from Idea to Release

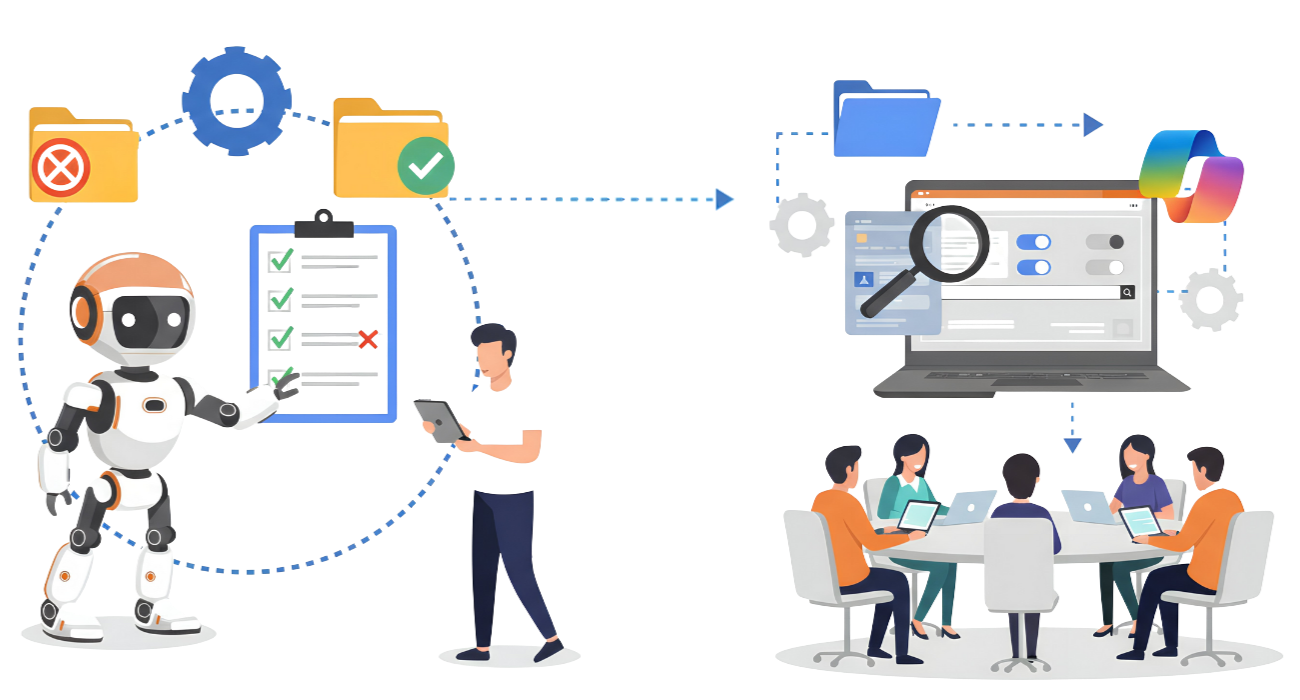

For AI applications like Copilot or proprietary agents to be operated compliantly from 2026, a binding approval process is required. The goal is not bureaucracy, but transparency: Which AI is used where, with what data, who manages it, and with what risk?

Step 1: Submit Use Case Profile

Every new AI deployment is registered with a short, standardized form. Typical contents:

- Purpose & Benefit of the Use Case

- Data Sources and Access Rights

- Affected User or Customer Groups

- Responsible Person in the Business Department (Owner)

- Technical Implementation (e.g., Copilot, Azure OpenAI, internal model)
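The profile fields listed above map naturally onto a small record type. This is a minimal sketch under stated assumptions: the class name `UseCaseProfile`, the field names, and the completeness check are hypothetical and simply mirror the five bullet points; a real form would likely carry more detail.

```python
from dataclasses import dataclass, fields


@dataclass
class UseCaseProfile:
    """Standardized registration form for a new AI deployment (illustrative)."""
    purpose: str                 # purpose and benefit of the use case
    data_sources: list[str]      # data sources and access rights
    affected_groups: list[str]   # affected user or customer groups
    owner: str                   # responsible person in the business department
    implementation: str          # e.g. Copilot, Azure OpenAI, internal model

    def missing_fields(self) -> list[str]:
        """Return the names of all fields that were left empty."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


if __name__ == "__main__":
    profile = UseCaseProfile(
        purpose="Answer employee questions about products",
        data_sources=["product-docs"],
        affected_groups=["employees"],
        owner="",  # not yet assigned
        implementation="Copilot",
    )
    print(profile.missing_fields())  # ['owner']
```

A profile with an empty `missing_fields()` result can then be passed on to the risk check.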

Step 2: Risk Check According to the AI Act

After the profile, the use case is classified into the AI Act risk categories:

- Low Risk: e.g., internal assistants without personal reference

- Subject to Transparency: e.g., chatbots, AI-generated content

- High Risk: e.g., AI in HR processes, critical infrastructure, biometric systems

Depending on the classification, appropriate requirements are triggered, e.g., documentation obligation, human oversight, data quality evidence, or entry into the EU database.
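The triage described in this step can be sketched as a simple rule set. Everything here is an assumption for illustration: the area keywords, the two boolean flags, and the mapping from tier to requirements are hypothetical simplifications of the examples above. The sketch is a first-pass filter for the approval workflow, not a legal classification under the AI Act.

```python
from enum import Enum


class RiskTier(Enum):
    LOW = "low risk"
    TRANSPARENCY = "subject to transparency"
    HIGH = "high risk"


# Hypothetical keywords for the high-risk examples named in the text.
HIGH_RISK_AREAS = {"hr", "critical_infrastructure", "biometrics"}

# Requirements triggered per tier, taken from the examples in the text.
REQUIREMENTS = {
    RiskTier.LOW: [],
    RiskTier.TRANSPARENCY: ["labeling of AI interaction or AI-generated content"],
    RiskTier.HIGH: [
        "documentation obligation",
        "human oversight",
        "data quality evidence",
        "entry into the EU database",
    ],
}


def classify(area: str, is_chatbot: bool, generates_content: bool) -> RiskTier:
    """First-pass triage; the highest matching tier wins."""
    if area in HIGH_RISK_AREAS:
        return RiskTier.HIGH
    if is_chatbot or generates_content:
        return RiskTier.TRANSPARENCY
    return RiskTier.LOW


if __name__ == "__main__":
    tier = classify("hr", is_chatbot=False, generates_content=False)
    print(tier.value, REQUIREMENTS[tier])
```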

Step 3: Approval & Action Plan

IT, data protection, legal, and potentially Risk/Compliance review the use case. It may only be used productively after approval. If risks exist but are acceptable, the decision is documented (Risk Acceptance). Missing controls are recorded as measures with a deadline.
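The approval rule in this step (no productive use before all reviewers have signed off, documented risk acceptance, measures with deadlines) can be captured in a small record. This is a sketch under assumptions: the set of mandatory reviewers, the class names `ApprovalRecord` and `Measure`, and the example values are hypothetical and would need to match the organization's own process.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional

# Assumption: these three functions must always approve; Risk/Compliance
# could be added for higher-risk use cases.
REQUIRED_REVIEWERS = frozenset({"IT", "Data protection", "Legal"})


@dataclass
class Measure:
    description: str
    deadline: date


@dataclass
class ApprovalRecord:
    use_case: str
    approvals: set[str] = field(default_factory=set)
    risk_acceptance: Optional[str] = None   # documented decision, if any
    measures: list[Measure] = field(default_factory=list)

    def may_go_productive(self) -> bool:
        """Productive use requires approval from every mandatory reviewer."""
        return REQUIRED_REVIEWERS <= self.approvals

    def overdue_measures(self, today: date) -> list[Measure]:
        """Return measures whose deadline has already passed."""
        return [m for m in self.measures if m.deadline < today]


if __name__ == "__main__":
    record = ApprovalRecord("Internal FAQ agent", approvals={"IT", "Legal"})
    record.measures.append(Measure("Enable prompt logging", date(2026, 3, 31)))
    print(record.may_go_productive())            # False: data protection missing
    record.approvals.add("Data protection")
    print(record.may_go_productive())            # True
```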

Step 4: Operation & Verification

After approval, logging, monitoring, and updating of the profile must be ensured. Changes to the use case (new data, new functions) trigger a renewed review.
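One way to detect that a use case has changed since approval is to store a fingerprint of the review-relevant parts of the profile and compare it during operation. The sketch below assumes a profile represented as a dictionary with hypothetical keys `data_sources` and `functions`; which fields count as review-relevant is a design decision for the governance team.

```python
import hashlib
import json


def profile_fingerprint(profile: dict) -> str:
    """Hash the review-relevant parts of a profile, independent of list order."""
    relevant = {
        "data_sources": sorted(profile.get("data_sources", [])),
        "functions": sorted(profile.get("functions", [])),
    }
    payload = json.dumps(relevant, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def needs_rereview(approved_fingerprint: str, current_profile: dict) -> bool:
    """True if data sources or functions differ from the approved state."""
    return profile_fingerprint(current_profile) != approved_fingerprint


if __name__ == "__main__":
    approved = {"data_sources": ["manuals", "product-docs"], "functions": ["answer"]}
    stored = profile_fingerprint(approved)

    changed = {"data_sources": ["manuals", "product-docs", "crm"], "functions": ["answer"]}
    print(needs_rereview(stored, approved))  # False
    print(needs_rereview(stored, changed))   # True
```

Sorting the lists before hashing means that merely reordering the data sources does not trigger a new review.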

Practical Example – an Agent with Clear Guardrails

What does compliant AI use look like in practice? Let’s consider an internal agent that answers employees’ questions about products, processes, or policies. The difference from “just turning it on”: The agent works only with approved information, is reviewed, documented, and operated with ongoing support.

Data Access – Only Approved Sources Instead of “Everything Open”

The agent does not receive full access to the company network, but only to clearly defined data sources – for example, product documentation, manuals, and internal FAQs. Systems with sensitive information such as HR data, contracts, or personal customer data are excluded or only accessible via roles with highly restricted permissions. Technically, control is managed through rights in M365, Azure AD, or comparable systems. This ensures traceability of the content the agent accesses.
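In practice the access rules live in the identity and permission system, but the principle of "deny by default, log every request" can be illustrated in a few lines. This is a sketch only: the source names, the `request_source` function, and the in-memory `access_log` are hypothetical and do not represent an M365 or Azure AD API.

```python
from datetime import datetime, timezone

# Hypothetical allowlist: anything not listed here is denied by default,
# which automatically excludes HR data, contracts, and customer data.
APPROVED_SOURCES = frozenset({"product-docs", "manuals", "internal-faq"})

# In-memory stand-in for an audit log; a real setup would write to a
# tamper-resistant log store.
access_log: list[dict] = []


def request_source(agent_id: str, source: str) -> bool:
    """Allow access only to approved sources and record every request."""
    allowed = source in APPROVED_SOURCES
    access_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "agent": agent_id,
        "source": source,
        "allowed": allowed,
    })
    return allowed


if __name__ == "__main__":
    print(request_source("faq-agent", "manuals"))   # True
    print(request_source("faq-agent", "hr-data"))   # False
    print(len(access_log))                          # 2
```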

Approval & Tests – before the Agent Goes Live

Before the agent goes live, the use case is registered via a profile: purpose, data sources, responsible business department, risk assessment. IT, data protection, and potentially compliance review this application. This is followed by a test phase in a secure environment, which investigates whether the agent generates false content, discloses confidential information, or behaves unpredictably. Only when results are stable and guardrails are effective is approval granted for productive use.
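Part of such a test phase can be automated by running a fixed set of probing prompts against the agent and flagging answers that contain confidential terms. The following is a deliberately crude sketch: the marker list, the `run_release_checks` function, and the `stub_agent` stand-in are hypothetical, and simple keyword matching will miss paraphrased leaks, so it complements manual review rather than replacing it.

```python
from typing import Callable

# Hypothetical markers that should never appear in an answer.
CONFIDENTIAL_MARKERS = ("salary", "contract no.", "iban")


def run_release_checks(agent: Callable[[str], str], prompts: list[str]) -> list[str]:
    """Run each prompt through the agent and report answers with leaked markers."""
    failures = []
    for prompt in prompts:
        answer = agent(prompt).lower()
        leaked = [m for m in CONFIDENTIAL_MARKERS if m in answer]
        if leaked:
            failures.append(f"{prompt!r} leaked: {', '.join(leaked)}")
    return failures


def stub_agent(prompt: str) -> str:
    """Stand-in for the real agent, used only to demonstrate the check."""
    if "pay" in prompt.lower():
        return "The salary for this role is listed in the HR system."
    return "Please see the product manual, section 3."


if __name__ == "__main__":
    test_prompts = [
        "How do I reset the device?",
        "How much pay does my colleague get?",
    ]
    for failure in run_release_checks(stub_agent, test_prompts):
        print(failure)
```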

Training & Change – how Employees Work Safely with AI

Even the best agent only functions if people handle it correctly. Therefore, users are trained: Which prompts work? Which data does the agent use? When do I need to review and approve results? This also includes a clear indication that AI supports decisions, but does not replace them. In parallel, a feedback channel is established through which errors, new requirements, or suggestions for improvement can be reported. This ensures the agent does not remain a one-time project, but becomes a controlled, learning component of the organization.

Ready to Bring Copilot & AI Agents Safely into Operation?

Many companies are now facing the same task: using AI, but in a structured, controlled, and AI Act-compliant manner. We support you precisely where internal resources or experience are lacking. Whether it’s a governance concept, risk analysis, policy development, or a pilot project with Copilot & Agents – we design the process together with your teams. This way, AI evolves from an experiment into an integral part of your operations.